“We have a variety of use cases that have proven themselves out, and we continue to iterate with them. We’re in the dawn of a period where we use these tools at scale,” he declared.

That such a global insurance behemoth has made public its intention to embrace AI at scale is seen by many as a gold-seal endorsement of the technology. Furthermore, Artificial Intelligence (AI) is nothing new. It has been in use in the business world for at least two decades via its less adventurous older brother, Machine Learning (ML). It has already proved its worth by driving automation and streamlining data aggregation, bringing order to formerly labour-intensive manual tasks that were prone to human error.

Generative AI spells a new beginning for the technology

But now, as ChatGPT and Google’s Bard begin to pervade all areas of business, proving themselves valuable if rather uncontrolled commodities, Generative AI is receiving a lot more attention. To date, more than 1,000 tools utilising Generative AI, applicable across numerous industries, have been launched.

The swift adoption of this technology, which remains largely unregulated, has led many technologists to sound the alarm.

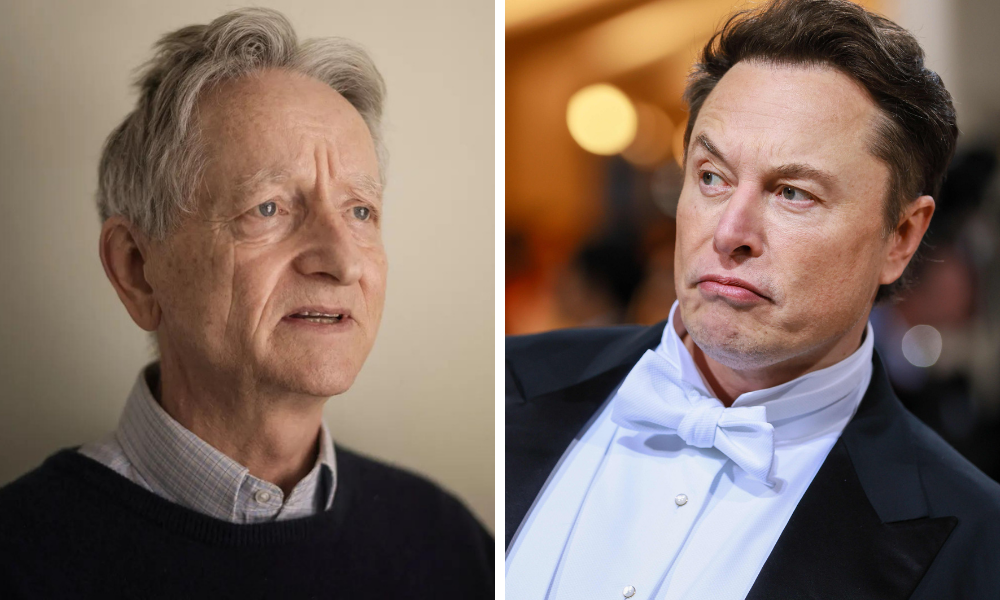

Elon Musk, whose own brilliant explorations have driven considerable advancements in Generative AI, has, perversely, been voicing concern about its dangers for years. Musk has gathered much support in the technology community and was recently among more than 1,000 experts who signed an open letter citing their concerns about the pace of AI development and its potential consequences.

Equally, 75-year-old Dr Geoffrey Hinton, unofficially dubbed ‘The Godfather of AI’ for his years of research and dedication to the field, recently announced his shock resignation from Google in a statement to the New York Times. Alarmingly, Hinton says he now regrets his work, and he told the press that some of the dangers posed by AI chatbots were considerable.

It’s an uncomfortable position when the scientists who have dedicated their lives to advancing their craft start shouting ‘Fire’, because it leaves the rest of us, who trusted the technologists at the top, wondering what to do next. But understanding AI’s current and potential use cases, and the new regulations taking shape around it, goes a long way towards calming the panic.

New AI regulations are already being implemented

For example, the European Parliament has already advanced a new set of regulations covering Generative AI. According to reports, the AI Act passed its first key vote in the European Parliament’s Civil Liberties and Internal Market committees by “a very large majority”. A plenary vote on the act is expected in June, after which it will be negotiated with the EU Council and Commission.

European Parliament deputy Svenja Hahn said in April: “Against conservative wishes for more surveillance and leftist fantasies of over-regulation, parliament found a solid compromise that would regulate AI proportionately, protect citizens’ rights, as well as foster innovation and boost the economy.”

The US has taken a slightly different approach. Laws to protect employees against AI bias were implemented in January 2023, and a more stringent examination of generative AI tools such as Bard and ChatGPT, with a view to implementing further regulations, began early last month.

What’s the difference between Narrow AI and Generative AI?

Until recently, the only AI used in any widespread fashion was Narrow AI. It is impressively efficient and has taken the pain out of a number of boring, labour-intensive tasks, especially in the insurance space.

Generative AI, by contrast, has an ‘across the board’ ability, which means anyone can use it for a seemingly endless range of purposes. It keeps improving as it is refined and retrained, and it is not confined to a single set of task rails, as Narrow AI is. And because it is so new, the pace at which it will develop is rapid and hard to predict.

Recently, however, Pravina Ladva, Swiss Re’s CTO, wrote that even the latest advances in AI are not yet truly “general”. She said: “Current insurance AI applications are based on a narrow type of Artificial Intelligence. AI refers to mathematical models that learn patterns from data and enable faster or even automated decisions.”

Ladva continued: “No true ‘general-AI’ exists yet, but recent advances partly begin to exceed capabilities associated with Narrow AI (e.g., OpenAI’s ChatGPT and GPT-4 or Google’s Bard).”

Paul Donnelly, Executive Vice President EMEA at FINEOS, also draws this distinction, but says the potential of Generative AI has taken things to a new level. “I think a distinction must be drawn between the push towards Artificial General Intelligence and today’s use of Narrow AI. ChatGPT is a milestone on the path to the former, and it has caused a sharp intake of breath. However, Narrow AI has proven its worth, doing a lot of the unglamorous heavy lifting in the insurance industry today.”

Current AI use cases in the insurance industry

From underwriting and claims processing to customer servicing, the insurtech industry has embraced the potential AI offers, and as a result many tasks are now completed faster, more efficiently and with fewer errors.

Anthony Peake, CEO and Founder of Intelligent AI, says his team is using AI, “to automate data collection, cleaning, validations and modelling, to deliver broader and better and quicker insights, and to automate and target programmes with end-to-end clients to address risk and lower claims.”

As well as using AI and satellite image analysis to assess sites globally, clean and geocode addresses, and model risk scores across millions of sites, Peake says the technology has delivered impressive results when applied to ESG evaluations. He also believes AI can make the world a safer place. “I see AI augmenting roles and enabling the insurance sector to free up resources to focus on mitigating risk and advising clients, rather than simply selling policies, paying claims and analysing issues after an incident. I think this is super positive. There should never be another Grenfell Tower, as the data is/was there, and now we can be far more proactive on risk, using AI and other such tools.”

FINEOS also has several AI deployments that have proven invaluable. Donnelly explains: “As context, life insurance is a complex ecosystem of insurers, brokers, employers, employees, and supporting IT and HR systems from different providers. All have a key role to play and all provide value at different points in the value chain. However, as data moves across organisational boundaries and IT ecosystems, it invariably creates data friction.”

He continues: “AI has proven it can play a significant role to reduce this friction, and that must be in the interests of all parties… As an indication of the scale of this impact, at one FINEOS customer site alone this AI solution transformed over 30,000 group scheme files containing the data of 20,000,000 scheme members in its first two years of operation.”

Yes, AI is useful. But what about the risks?

According to Manasi Vartak, CEO and Founder of Verta, a company that specialises in AI innovation and research in relation to the insurance industry, the potential consequences of poorly built or misapplied Generative AI must be given careful consideration.

That means regulations to protect against fraud and uphold privacy must be treated as paramount. There is also a risk that models could produce biased outputs.

She says, “Generative AI is a fascinating topic, and we do see both opportunities and risks associated with it, including in the insurance industry. On the opportunity side, it has had a huge impact anywhere content creation is central. Marketing can use it to create more personalised content. Customer service can improve chatbots to deliver better information to clients. Software developers are generating code faster using generative AI.”

However, she continues, “We also see risks and challenges associated with using Generative AI, both for insurance companies and organisations in other sectors. Insurance companies, for example, could see an increase in fraudulent claims that use images of accident damage created using generative AI. There are concerns around generative models producing biased outputs if the data they are trained on itself reflects biases.”

Vartak adds that copyright issues are still being worked out, since Generative AI can be used to create near-identical copies of existing products or content. Output quality can also be a challenge, as these models are prone to producing “hallucinations”, or factual errors.

But, she adds, “This isn’t to say that companies shouldn’t be actively looking at how to use Generative AI. But businesses need to carefully consider the implications of using the technology and take steps to mitigate potential risks.”

What will AI mean from a company culture perspective in the insurance industry?

For the time being at least, AI will be used to enhance the workforce rather than cull it. Whether that remains the case, though, is anyone’s guess. Peake firmly believes AI is a useful tool and maintains that it will always require human input.

He says, “We see AI augmenting (not replacing) the role of underwriters and risk engineers. Insurers spend 80% of their time doing admin (looking for data, reading lots of unstructured data and rekeying data, or making decisions with very little data), and only having 20% of their time to work with end clients to reduce risk and increase health & safety and business continuity.”

He continues, “We want insurers to turn this around to spending less than 20% of their time doing admin and spend 80% of time working with clients to reduce risk and grow their businesses, save lives and become more sustainable.”

Hamesh Chawla, CEO and Co-founder of Mulberri Inc., who leads one of the most dynamic embedded insurtechs in the market today in the payroll, HR and CRM space, agrees. While many experts are convinced AI will lead to underwriters and actuaries losing their jobs, or even becoming obsolete, Chawla considers this an overstatement driven by media panic. “ChatGPT has gained attention recently because of its astounding capabilities and the disruption potential it depicts. AI will continue to become an integral part of our lives and businesses, and we should plan for it.

“However, it cannot replace everything. For instance, AI cannot replace an insurance broker simply because it has trained on a vast amount of data. It cannot be a source to go to for an owner whose business has just suffered a cyber-attack and needs someone to talk to.”

For Chawla, strict regulations will be key. He says they must be implemented to ensure security, privacy and freedom from bias. “If the AI is not trained well, biases and discrimination could creep back in and set us all back – not to mention legal implications for all involved. Therefore, I do strongly agree that there is a need for regulations to control AI development and deployment in general – more so in cases where there could be a significant impact on society and people’s livelihoods.”

He concludes, “Like the advent of the internet, Large Language Models (LLMs) will have a massive impact on almost all our businesses. It also needs oversight and a regulated pace until we understand what and how much the impact will be.”

Just how effective will AI regulations be?

Ultimately, each country and industry will develop its own set of regulations, based on its continued experience of Generative AI and the advantages it brings. And herein lies the quandary.

As with all great advances, such as the first computer and the internet, those at the forefront of the race stand to gain the most, regardless of the consequences.

Peake agrees, but he also points out that the AI naysayers, those who decry its potential and call it an existential threat, may have ulterior motives. He says, “I can see some potential longer term risks, but also processes to manage these risks, but I also see some of these people who are raising concerns who are heavily investing and using these concerns to delay the market to allow them to catch up, so they have personal reasons to slow down the market.”

Whatever the future holds, AI in its Generative and Narrow forms will continue to evolve at pace and transform the business world. There will be many benefits, and conversely, as in all things, there will be consequences. But failures and mistakes are inevitable in the journey towards growth, innovation and maturation.

Microsoft CEO Satya Nadella is also cautiously optimistic about AI’s future. In a recent interview, he said that humans must be “unambiguously, unquestionably” in charge of powerful AI models to prevent them from going out of control. He said this potential problem is one that can be addressed by making models safer and “more explainable” in the first place.

Join AI experts at Insurtech Insights USA 2023

We’re deep-diving into AI and its uses in the insurance industry at Insurtech Insights USA 2023, on June 7th and 8th at the Javits Center in New York.

Keynote Speaker: Jim Fowler, Chief Technology Officer, Nationwide

Unpacking Generative AI

Speaker: Naveen Agarwal, CEO and Managing Partner, NavDots

The New Era of Underwriting: How Advanced AI is Personalizing Underwriting Like Never Before

Speakers:

Keith Raymond, Principal Analyst, Celent

David Carter, EVP, Chief Underwriting Officer, Sompo International

Scott Aiello, VP, Commercial Strategy, Simply Business

Shannon Shallcross, Head of Client Services, PinPoint Predictive

Donald Lacey, Chief Investment Officer, Ping An Voyager Partners

The Technologies Creating a Dynamic Cyber Insurance Strategy

Speakers:

Roman Itskovich, Co-Founder & CRO, At-Bay

Trent Cooksley, Co-founder & Chief Operating Officer, Cowbell

Courtney Maugé, SVP, Cyber Practice Leader, NFP

Hamesh Chawla, Co-Founder & CEO, Mulberri

Embracing Innovation: How Insurers and Big Tech Can Collaborate

Speakers:

Jeffery Williams, Director of Digital Insurance Strategy, Worldwide Financial Services, Microsoft

Robert Parisi, Head of Cyber Solutions, Munich Re Americas

Asaf Lifshitz, CEO of Sayata

Nico Stainfeld, Partner, Foundation Capital