Neil Raden: “Principles and practices for developing trustworthy AI” are everywhere. But there is a burning need to reverse the direction: to apply these technologies to fix longstanding inequities by identifying accepted practices that violate those very principles of AI Ethics.

One that may seem innocuous enough unfairly burdens a huge swath of our community – credit rating for insurance.

I’ve spent a lot of time with actuaries. I guess that’s a funny way to start a conversation, but I was an actuary for ten years, and in the past four years, my practice included a substantial amount of time with actuaries. Actuaries have always been on the razor’s edge between risk analysis and outright unfair discrimination. Generally, when they are engaged in the latter, they have consistent talking points to explain away the unsavory things they do.

For example, women pay less for life insurance because they live longer. They pay more for health insurance because they have more frequent and severe (in insurance talk, that means expensive) claims. These old saws are not valid anymore in the world of data science and AI, where all “women” and “men” can be micro-classified with different risk profiles.

But there is one thing all insurance companies do, except in the four states where it is prohibited (Hawaii, California, Massachusetts, and Michigan). They price policies for auto, homeowners, and life insurance using a factor that is boldly, disgracefully, and deliberately racist: credit scoring. Using FICO scores for auto, homeowners, and life insurance is perhaps the most blatantly racist, discriminatory, and unethical thing insurance companies habitually do.

They point to various studies conducted by consulting firms that indicate a clear relationship between a poor credit score and higher risk. There is a term for this in the new field of AI Ethics: fairwashing, which means insisting on a false explanation that a machine learning model respects some ethical values when it doesn’t. Here’s a flimsy example from the Insurance Information Institute’s “Background on Credit Scoring”:

The reason for the predictive power of insurance scores is up for debate. But why are insurance scores so predictive? One possible answer is behavioral. Supposedly, people who manage their finances well tend to also manage other important aspects of their lives responsibly (emphasis mine), such as driving a car. People who manage money carefully may be more likely to have their vehicle serviced at appropriate times and more effectively manage the most important financial asset most Americans own, their house, making routine repairs before they become significant insurance losses.

Every time I hear this excuse, it makes my blood boil. There is always a pattern of discriminatory practices that is the root cause of the poor credit histories. The 1974 Equal Credit Opportunity Act barred credit-score systems from using information such as sex, marital status, national origin, religion, or race, and credit scoring was supposed to eliminate bias. A 2018 study by the National Community Reinvestment Coalition found that “while overt redlining is illegal today, having been prohibited under the Fair Housing Act of 1968, its enduring effect is still evident in the structure of U.S. cities.” Access to credit is “an underpinning of economic inclusion and wealth-building in the U.S.”

“People who manage money carefully” is a classifier for middle and high-income (white) people. If you are managing a household and working two low-paying jobs, I’d suggest you manage money better than anyone. But when you live on the razor’s edge, the slightest unexpected expense is going to affect paying your bills on time. How, other than some shaky statistical models, does that raise your risk profile? Do poor people have low FICO scores because they don’t “manage money carefully?” No, they have low FICO scores because they don’t have enough money, and it is disgraceful that insurance companies charge them double or triple or more for car insurance, even if they have a good driving record.

Debt-collection lawsuits that end in default judgments also disproportionately go against “protected classes,” according to a 2020 Pew Charitable Trusts report. What happens next creates a negative-credit snowball. A crisis hits, and “protected class” borrowers can’t pay their debts. They are already more likely to be underpaid. Reports have shown creditors are more likely to sue working-poor borrowers. “Debt collection lawsuits that end in default judgment can have lasting consequences for consumers’ economic stability,” the Pew report said, adding, “Over the long term, these consequences can impede people’s ability to secure housing, credit, and employment.”

First of all, “predictive” is an inadequate excuse for something discriminatory and harmful to people of color and protected groups. Any statistician can tell you that the path between dependent and independent variables is often littered with overlooked confounding variables. Is scurvy caused by not eating citrus fruits? No, it’s caused by a lack of vitamin C.
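To make the confounding point concrete, here is a minimal sketch in Python using purely synthetic numbers, not insurance data. Every name in it (`confounder`, `score`, `losses`) is hypothetical and chosen only for illustration. A hidden variable drives two observed variables that have no direct effect on each other; they still look correlated until the hidden variable is accounted for.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def residuals(ys, zs):
    """What is left of ys after a least-squares fit of ys on zs."""
    n = len(ys)
    my, mz = sum(ys) / n, sum(zs) / n
    slope = (sum((z - mz) * (y - my) for z, y in zip(zs, ys))
             / sum((z - mz) ** 2 for z in zs))
    return [(y - my) - slope * (z - mz) for z, y in zip(zs, ys)]

rng = random.Random(0)  # fixed seed so the run is repeatable
n = 5000

# Synthetic, illustrative data: one hidden factor feeds both observed variables.
confounder = [rng.gauss(0, 1) for _ in range(n)]
score = [c + rng.gauss(0, 1) for c in confounder]   # never influences losses
losses = [c + rng.gauss(0, 1) for c in confounder]  # never influences score

# Raw correlation: looks "predictive" even though there is no direct link.
raw_corr = pearson(score, losses)

# Partial correlation: correlate what remains after removing the confounder.
partial_corr = pearson(residuals(score, confounder),
                       residuals(losses, confounder))

print(f"raw correlation:     {raw_corr:.2f}")
print(f"partial correlation: {partial_corr:.2f}")
```

In this toy setup the raw correlation is clearly positive, while the partial correlation sits close to zero. That is the statistical shape of the scurvy example: the observed variable predicts the outcome only because both depend on something else.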

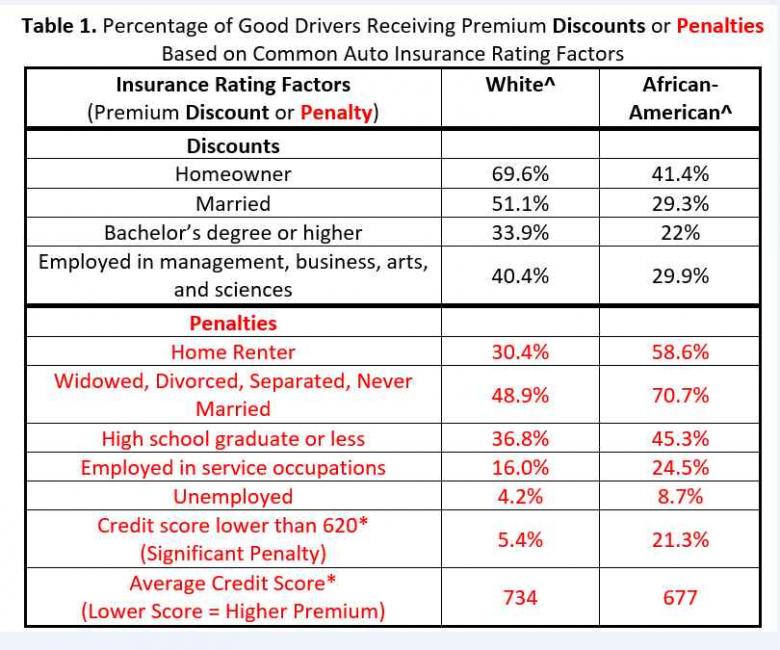

In a 2015 report, the Consumer Federation of America (CFA) found that ZIP codes with predominantly African American residents face premiums that are 60% higher than those in mostly white ZIP codes, after adjusting for population density. Taken all together, it is clear that African Americans will pay more for auto insurance than white drivers, even when everything related to driving safety and vehicle type is held constant.

I spoke with the Chief Actuary of the New Mexico Department of Insurance, and she explained it this way: “New Mexico is the fifth-largest state in the U.S. but has only two million people, almost half of whom are poor. Auto insurance is mandatory and expensive, almost like a regressive tax. When I get a new rate filing from an insurance company, the first thing I think is, ‘Is this fair?’” Her department’s biggest problem is that the pricing models have become more technical with AI, to the point where it’s hard to decipher the key drivers. See my recent article on the problem of algorithmic opacity.

The Governor of the State of Washington recently pushed a bill into the legislature to restrict credit scoring in insurance. It would not eliminate the practice completely, but it’s an excellent first step. The legislature is rejecting it without even considering it. As I mentioned, some states, including California, Hawaii, Massachusetts, and Michigan, strictly limit or entirely prohibit insurance companies’ use of credit information in determining auto insurance rates. In these states, your credit score won’t affect your insurance rates, no matter how good or bad it is.

My take

Systemic racism is so pervasive that it even affects auto insurance rates. Of all the situational barriers that African Americans, other people of color, and the working poor of any ethnic background face daily, this is one that insurance companies could easily solve, especially now with AI models that can get to root cause analysis.

Source: Tech Crunch
